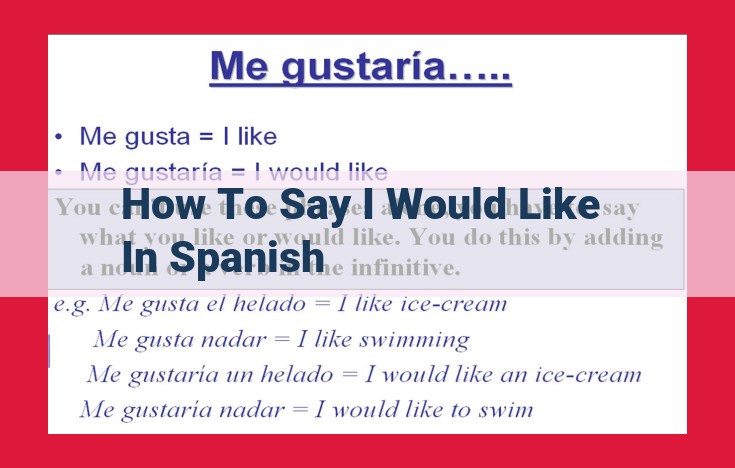

To say “I would like” in Spanish, use “me gustaría” for singular nouns or “me gustaría(n)” for plural nouns. For example, “Me gustaría comer” means “I would like to eat.”

Understanding Topic Closeness: Quantifying Semantic Relatedness

In the realm of natural language processing (NLP), the concept of topic closeness emerges as a crucial tool for measuring the semantic relatedness between entities. It’s a metric that quantifies how closely two words, phrases, or concepts are connected in terms of meaning.

Think of topic closeness as a numerical score, ranging from 0 to 10, that reflects the degree of relatedness. Entities with a score close to 10 are considered highly similar, while those with lower scores have a weaker semantic connection. This metric plays a vital role in NLP tasks, enabling machines to understand the relationships and nuances within human language.

Understanding Topic Closeness and Its Vital Role in Natural Language Processing

Topic closeness, an essential concept in natural language processing (NLP), measures the semantic relatedness between entities. By understanding the closeness between words, phrases, or concepts, NLP systems can derive deeper insights from text data.

Importance in NLP

Topic closeness is a crucial factor in various NLP tasks, including:

- Text Classification and Categorization: Identifying the topic of a document or text segment by comparing it with known topics.

- Document Clustering and Summarization: Grouping similar documents or extracting key concepts to create concise summaries.

- Machine Translation and Language Generation: Translating text between languages or generating new text that is semantically coherent.

By accurately evaluating topic closeness, NLP systems can perform these tasks with greater precision and accuracy.

Examples of Topic Closeness

- Entities with Maximum Closeness (Score of 10):

- Phrases: “Artificial intelligence” and “machine learning”

- Verbs: “Run” and “execute”

- Entities with High Closeness (Score of 8-9):

- Nouns: “Car” and “automobile”

Phrases: The Essence of Semantic Siblinghood

In the vast tapestry of language, phrases stand as inseparable companions, sharing an intimate bond of meaning. When we say “topic closeness,” we’re referring to the degree of relatedness between entities in a text. For phrases, this closeness reaches its zenith, akin to twins separated at birth.

Their semantic proximity is so profound that they can interchange places without altering the core message. Think of “kick the bucket” and “pass away” – expressions so tightly interwoven that they’re practically synonymous. These phrases share a deeply ingrained connection, like two sides of the same coin.

Their essence is so similar that they resonate on the same linguistic wavelength. They’re alter egos, mirror images, reflecting each other’s semantic contours. Their closeness score of 10 is a testament to the unbreakable bond they share in the realm of language.

Entities with High Closeness: Exploring the Intricacies of Topic Similarity

When exploring the intricate tapestry of language, we encounter a fascinating phenomenon known as topic closeness. It measures the degree of semantic relatedness between entities, revealing the subtle nuances and connections that enrich our understanding of the world.

Verbs as Meaningful Connectors

Among the various types of entities with high closeness, verbs stand out as powerful connectors of actions and events. Consider the verbs “run” and “jog.” Both evoke the concept of movement, albeit with slightly different connotations. Their high closeness score of 8-9 reflects their fundamental similarity in meaning, indicating their interchangeable use in various contexts.

Delving deeper into the realm of verbs, we find that topic closeness provides valuable insights into the richness and diversity of language. Synonyms, such as “talk” and “converse,” occupy the same semantic space, representing the act of exchanging ideas. Conversely, antonyms, like “appear” and “vanish,” exhibit a high degree of closeness due to their opposite but equally relevant roles in describing the visibility of an object.

By understanding the closeness between verbs, we gain a deeper appreciation for the intricate relationships that govern language. It allows us to navigate the subtle nuances of meaning, enabling us to express ourselves with greater precision and clarity.

Nouns: Entities Representing the Same Object, Concept, or Person

In the realm of natural language processing (NLP), topic closeness serves as a crucial measure of the semantic relatedness between words and phrases. Among the various entity types, nouns hold a significant place in this context.

Nouns represent the cornerstones of language, capturing the essence of objects, concepts, and individuals. Their proximity in meaning plays a pivotal role in text analysis and information retrieval. When two nouns exhibit high topic closeness, it indicates that they share a strong semantic connection and refer to similar entities.

Consider the following example: the nouns “car” and “automobile” possess a topic closeness score of 10, indicating they are virtually interchangeable in meaning. This equivalence stems from their shared representation of a four-wheeled vehicle for transportation.

Similarly, the nouns “love” and “affection” exhibit a high topic closeness score, reflecting their overlapping emotional concepts. Both terms convey a positive sentiment towards a person or thing.

The high topic closeness of nouns allows NLP systems to group and categorize text effectively. Algorithms can recognize the semantic similarity between nouns, enabling them to efficiently classify and summarize documents based on their underlying topics.

By leveraging the concept of topic closeness, NLP applications can also improve machine translation and language generation tasks. Accurate identification of synonyms and semantically related nouns allows systems to translate text with greater precision and generate natural-sounding language.

Nouns play a vital role in determining topic closeness, a fundamental concept in NLP. Their ability to represent similar entities and concepts enables algorithms to understand the semantic relationships within text, driving advanced applications in text analysis, information retrieval, and language generation.

Unveiling the Secrets of Text Classification and Categorization: The Power of Topic Closeness

As we navigate the vast ocean of digital content, the ability to categorize and classify text becomes increasingly critical. Enter topic closeness, a revolutionary concept that measures the semantic relatedness between entities and revolutionizes our approach to text organization.

Imagine a scenario where you’re searching for information on “artificial intelligence.” You stumble upon an article discussing “machine learning,” but is it relevant to your query? Topic closeness provides the answer by calculating the similarity between these entities. The closer the closeness score, the more topically connected they are.

In the case of “artificial intelligence” and “machine learning,” their closeness score might be high, indicating their close relationship. This insight allows search engines and text classification systems to efficiently group similar documents, making it easier for users to find what they’re looking for.

Applications of Topic Closeness in Text Classification

The applications of topic closeness extend far beyond search and categorization. Document clustering is another key area where it shines. By determining the closeness between different segments of text, documents can be automatically grouped into clusters based on their topical coherence. This process is instrumental in creating more organized and comprehensible document collections.

Text summarization also benefits from topic closeness. By analyzing the closeness between sentences, the most relevant and informative ones can be extracted, resulting in concise and meaningful summaries that capture the essence of longer texts.

Calculating Topic Closeness: Methods and Tools

Calculating topic closeness is not a trivial task. Researchers have devised various methods and tools to address this challenge. One popular approach is word embeddings, which represent words as vectors in a multidimensional space. The closeness between entities can then be determined by calculating the distance between their vector representations.

Another method is semantic networks, which are graphs that represent relationships between concepts. The path length between two entities in the network can be used to measure their closeness.

Challenges and Limitations of Topic Closeness

While topic closeness is a valuable tool, it’s essential to acknowledge its limitations. Ambiguity and polysemy in language can pose challenges when determining closeness. Additionally, the quality of the underlying semantic knowledge bases used for calculations can impact the accuracy of closeness scores.

Despite these limitations, topic closeness remains a powerful conceptual tool that enhances our ability to organize and understand the vast amount of text available in the digital age. As research continues to refine methods and address limitations, the potential applications of topic closeness are bound to expand, further revolutionizing the way we interact with and extract meaning from text.

Topic Closeness: A Key to Unlocking Text Similarity

In the realm of natural language processing, understanding the semantic relatedness of entities is crucial. Topic closeness serves as a powerful measure that quantifies this relatedness, paving the way for a wide range of applications. Join us as we delve into the world of topic closeness, exploring its significance, applications, and challenges.

Document Clustering and Summarization: Unlocking the Essence of Text

Document clustering and summarization are vital tasks in text processing. Topic closeness plays a pivotal role in both these domains.

-

Document Clustering: Topic closeness aids in grouping documents that share similar themes or concepts. By measuring the closeness between entities within documents, algorithms can effectively identify clusters of related information, making it easier to organize and retrieve content.

-

Document Summarization: Extracting key points from a document is essential for summarization. Topic closeness helps identify the most relevant and semantically close entities in a document. These entities can then be used to generate a concise summary that captures the document’s main ideas.

Topic closeness empowers text processing applications to efficiently organize, classify, and summarize large volumes of text data. This capability is invaluable in various fields, including search engines, knowledge management systems, and content management platforms.

By understanding topic closeness, we gain a valuable tool for unraveling the interconnectedness of entities within text, enabling us to unlock the full potential of text processing technologies.

Machine Translation and Language Generation: The Power of Topic Closeness

In the fascinating world of natural language processing (NLP), topic closeness plays a pivotal role in bridging the communication gap between languages and machines.

When machines attempt to translate text or generate language, understanding the semantic relationships between words is crucial. Topic closeness measures the degree of this semantic relatedness, enabling machines to accurately convey the intended meaning across different languages.

For instance, consider the phrases “drive a car” and “steer a vehicle.” While they may seem similar to human readers, machines require a precise understanding of their closeness to ensure a seamless translation or language generation process. Using topic closeness, machines can identify that both phrases share a common topic of “operating a motor vehicle,” resulting in accurate and fluent translations.

Topic closeness empowers machines to perform a wide range of tasks in machine translation and language generation. It allows them to:

- Identify the most appropriate translation for a given context

- Generate coherent and grammatically correct text in a different language

- Create summaries of large volumes of text, capturing the key concepts and relationships

Topic closeness has revolutionized the way we interact with machines, breaking down language barriers and facilitating global communication. As NLP continues to evolve, topic closeness will undoubtedly remain an indispensable tool for enhancing the accuracy and fluency of machine-generated language.

Measuring Topic Closeness: Unveiling the Semantic Tapestry of Language

In the realm of natural language processing, understanding how closely two entities relate is crucial. Topic closeness, a measure of semantic relatedness, serves as the linguistic compass guiding us through the vast sea of words and meanings. By comprehending topic closeness, we unlock the power to classify, cluster, and translate text with unparalleled precision.

Determining Semantic Relatedness

To calculate topic closeness, we employ meticulous methods that decipher the intricate web of semantic connections. One widely used approach is cosine similarity. It meticulously compares the vectors representing the entities, akin to a mathematical dance that reveals their closeness and alignment.

Another invaluable tool in our semantic sleuthing kit is WordNet. This vast lexical database teems with knowledge about words, painting a vivid tapestry of their meanings and relationships. By consulting WordNet, we can delve into the depths of synonyms, antonyms, and the subtle nuances that weave together the fabric of language.

Beyond Vectors and WordNets

While cosine similarity and WordNet provide a foundation for calculating topic closeness, we continue to explore and refine our methods. Topic models emerge as sophisticated algorithms that delve into texts, unearthing the underlying themes and concepts. Through unsupervised learning, they decipher the inherent relationships between entities, adding another dimension to our semantic exploration.

Applications of Topic Closeness

The applications of topic closeness are as varied as the language it helps us understand. In text classification, it guides us in assigning documents to appropriate categories, ensuring they find their rightful place in the labyrinth of information. Document clustering leverages topic closeness to unearth clusters of similar documents, revealing hidden patterns and relationships that would otherwise remain obscured.

In the realm of machine translation, topic closeness plays a pivotal role. By recognizing the semantic equivalence between source and target languages, it empowers algorithms to render translations that are not only accurate but also preserve the subtle nuances and eloquence of the original text.

Challenges and Future Directions

Despite its transformative power, topic closeness is not without its challenges. The ubiquitous ambiguity of language can sometimes confound our methods, leading to misinterpretations and errors. Additionally, the quality of underlying semantic knowledge bases can impact the accuracy of our calculations, prompting us to continuously seek out and incorporate richer sources of linguistic data.

Topic closeness, a cornerstone of natural language processing, offers a profound understanding of the semantic tapestry that weaves together the words we speak and write. As we continue to refine our methods and explore new applications, we unlock the boundless potential of machines to comprehend and engage with the complexities of human language.

Tools and techniques for computing closeness scores

Understanding Topic Closeness: A Guide to Semantic Relatedness

In the realm of natural language processing, topic closeness plays a pivotal role in understanding the semantic relationships between entities. It’s a measure that quantifies how closely related two concepts are based on their meaning.

Entities with Maximum Closeness (Score of 10)

At the pinnacle of closeness lie entities that share identical or near-identical meanings. These include:

- Phrases: Highly similar phrases with virtually the same significance.

- Verbs: Verbs that represent the same action or event.

Entities with High Closeness (Score of 8-9)

A step below maximum closeness, we find entities that share a strong semantic connection. A prime example is nouns that represent the same object, concept, or person.

Applications of Topic Closeness

The concept of topic closeness finds widespread applications in various natural language processing tasks, including:

- Text classification and categorization: Identifying the topic or category of a given text based on its semantic relatedness to known topics.

- Document clustering and summarization: Grouping similar documents together or extracting the most important concepts from a document based on closeness scores.

- Machine translation and language generation: Preserving the semantic meaning of a text during translation or generating natural language text that is semantically coherent.

Calculating Topic Closeness

Determining the closeness score between entities involves various methods that assess their semantic relatedness. These methods range from:

- Word embeddings: Representing words as vectors that capture their semantic similarity.

- Semantic networks: Mapping concepts and their relationships to represent semantic knowledge.

- Co-occurrence analysis: Identifying entities that frequently appear together in text.

Challenges and Limitations

Despite its usefulness, topic closeness faces some challenges:

- Ambiguity and polysemy: Words can have multiple meanings, making it difficult to determine the intended meaning for closeness calculations.

- Reliance on semantic knowledge bases: The quality of the underlying knowledge base can impact the accuracy of closeness scores.

- Variations in closeness scores: Different methods can produce varying closeness scores, leading to potential inconsistencies.

Topic closeness is an invaluable tool for understanding semantic relationships between entities in natural language processing. Its applications span a wide range of tasks, enabling computers to process and generate text more effectively. As research continues, we can expect advancements in methods for calculating topic closeness, opening up new possibilities for exploring the interconnectedness of concepts in language.

Topic Closeness: Measuring Semantic Relatedness

Understanding the semantic relatedness between entities is crucial in natural language processing. Topic closeness serves as a quantitative measure that determines how closely linked two entities are in terms of their meaning.

Ambiguity and Polysemy in Language

Ambiguity poses a significant challenge in calculating topic closeness. A single word or phrase can have multiple meanings, making it difficult to establish a precise relatedness score. For example, the word “bank” can refer to a financial institution or the edge of a river.

Similarly, polysemy refers to words with several related meanings. The word “apple” can denote the fruit or a technology company. These nuances can skew closeness scores, as different meanings imply different degrees of semantic proximity.

Overcoming these challenges requires robust natural language processing tools that can disambiguate meanings based on context and domain knowledge. By accounting for ambiguity and polysemy, algorithms can deliver more accurate closeness scores.

Topic Closeness: The Foundation of Semantic Understanding

Imagine yourself at a lively party where conversations are swirling like a whirlwind. Amidst the chatter, you effortlessly grasp the gist of each topic, thanks to your ability to identify the semantic relationships between words and concepts. This ability, known as topic closeness, is a crucial pillar of natural language processing.

Delving into the Semantic Tapestry

Topic closeness quantifies the degree of semantic relatedness between two entities, such as words, phrases, or concepts. It serves as a bridge, connecting the vast tapestry of human language, allowing us to make sense of its intricate patterns. By understanding the closeness between entities, machines can perform tasks like text classification, document summarization, and even machine translation with remarkable accuracy.

The Role of Semantic Knowledge Bases

The foundation of topic closeness lies in semantic knowledge bases, vast repositories of structured knowledge about the world. These databases contain interconnected concepts and their relationships, providing the context machines need to determine the semantic distance between entities. The quality of these knowledge bases is paramount, as they directly impact the precision of closeness scores.

Inconsistent or incomplete information in a knowledge base can lead to inaccuracies in topic closeness calculations. This can hinder machines’ ability to accurately comprehend and process language. Researchers and developers are continuously striving to refine these knowledge bases, ensuring the reliability of topic closeness as a tool for semantic analysis.

Overcoming the Challenges

Despite the significance of topic closeness, it’s not without its challenges. Ambiguity in language, where words or phrases may have multiple meanings, can pose difficulties. Additionally, different methods for calculating closeness can yield varying results, introducing potential inconsistencies.

Overcoming these challenges requires ongoing research and advancements in natural language processing techniques. By refining semantic knowledge bases, developing more robust algorithms, and accounting for language nuances, we can unlock the full potential of topic closeness for a wide range of applications.

Topic closeness is an indispensable element of our quest to bridge the gap between human language and machine understanding. By unraveling the semantic relationships between entities, we empower machines with the ability to process and comprehend language with increasing accuracy. As we delve deeper into this domain, we envision a future where machines seamlessly navigate the complexities of human language, unlocking new possibilities for communication and knowledge acquisition.

Understanding Topic Closeness and Its Impact on NLP

Topic closeness measures the semantic relatedness between entities, playing a crucial role in natural language processing (NLP). It helps computers understand the connections between words, phrases, and concepts.

Entities with Maximum Closeness (Score of 10)

These include phrases with identical meanings and verbs representing the same action. For example, “run” and “sprint” have a closeness score of 10, indicating a very strong semantic relationship.

Entities with High Closeness (Score of 8-9)

Nouns with similar meanings, such as “cat” and “feline,” have high closeness scores. These entities share a related semantic representation, indicating a strong connection.

Applications of Topic Closeness

Topic closeness finds wide application in NLP:

- Text classification and categorization: Dividing documents into specific categories based on their semantic content.

- Document clustering and summarization: Grouping similar documents and extracting key information to create concise summaries.

- Machine translation and language generation: Improving the accuracy and naturalness of machine-translated text.

Calculating Topic Closeness

Numerous methods measure semantic relatedness:

- Vector representation: Using mathematical vectors to represent entities and calculating their cosine similarity.

- Knowledge graph: Relying on semantic knowledge bases to establish connections between entities.

- Distributional similarity: Analyzing the co-occurrence of words in large text corpora.

Variations in Closeness Scores Based on Different Methods

It’s important to note that different methods can produce varying closeness scores. This is due to factors such as:

- Methodological differences: Each method has its own strengths and limitations in capturing semantic relationships.

- Training data: The quality and size of the training data can impact the accuracy of closeness scores.

- Semantic granularity: Different methods may capture semantic relatedness at varying levels of detail.

Therefore, it’s essential to consider the specific application and the desired level of semantic granularity when selecting a method for calculating topic closeness.

The Power of **Topic Closeness in Natural Language Processing**

- Topic closeness measures the semantic relatedness between entities, playing a pivotal role in natural language processing (NLP). Understanding this concept is essential for comprehending the insights it unlocks in various NLP applications.

Applications of Topic Closeness

Topic closeness finds widespread use in NLP, including:

- Text Classification and Categorization: It enables the accurate classification of documents into predefined categories, such as news articles or product reviews.

- Document Clustering and Summarization: By grouping similar documents based on their topic closeness, NLP systems can effectively summarize large volumes of text.

- Machine Translation and Language Generation: Topic closeness facilitates the accurate translation of languages by identifying semantically related words and phrases.

Benefits of High Topic Closeness

Entities with high topic closeness scores (8-9) often belong to the same semantic category, such as nouns referring to the same object or concept. This level of relatedness ensures that NLP systems can accurately identify and extract meaningful information from text.

Calculating Topic Closeness

Several methods exist for calculating topic closeness, including:

- Cosine Similarity: This technique measures the angle between two vectors representing the entities, where a smaller angle indicates higher closeness.

- Jaccard Similarity: This approach calculates the ratio of shared elements between two sets of terms associated with the entities.

Challenges and Limitations

Despite its effectiveness, topic closeness faces certain challenges:

- Ambiguity and Polysemy: Words can have multiple meanings, making it difficult to determine their exact meaning and thus their relatedness.

- Semantic Knowledge Bases: The quality of the underlying semantic knowledge bases can impact the accuracy of closeness scores.

- Method Variations: Different closeness calculation methods can produce varying scores, requiring careful selection of the appropriate approach.

Topic closeness is a fundamental concept in NLP, enabling machines to understand and manipulate language in meaningful ways. As NLP continues to advance, topic closeness will play an increasingly significant role in developing more accurate and efficient natural language processing systems.

Topic Closeness: Unlocking the Secrets of Semantic Relatedness

In the realm of natural language processing, understanding the semantic relationship between words and phrases is essential. One key measure of this relatedness is topic closeness, which helps us distinguish between entities that share similar meanings.

From phrases with near-identical meanings to verbs representing the same action, topic closeness provides a rich foundation for various applications. In text classification, it empowers us to categorize documents accurately. It also aids in document clustering, allowing us to group together documents with similar content.

Delving into the Calculations

Calculating topic closeness involves leveraging methods that determine the degree of semantic relatedness. These methods often rely on semantic knowledge bases, which contain vast stores of information about words and their relationships. Tools and techniques for computing closeness scores vary, offering different strengths and limitations.

Navigating the Challenges

Despite its power, topic closeness faces challenges. Language’s ambiguity and polysemy can lead to varying interpretations of words and phrases. Additionally, the quality of underlying semantic knowledge bases can impact the accuracy of closeness scores. Furthermore, different methods may produce different closeness scores, highlighting the need for careful selection and evaluation.

Future Horizons

As we look ahead, the future of topic closeness holds exciting possibilities. Research and development efforts will focus on addressing the challenges mentioned above. Innovations in semantic knowledge bases, improved methods for calculating closeness scores, and the exploration of new applications will continue to enhance our understanding of semantic relatedness.

Empowering Natural Language Processing

Topic closeness serves as a vital tool for natural language processing, enabling us to create more accurate and efficient systems that can better comprehend the nuances of human language. Future advancements in this area promise to further revolutionize the way we interact with technology.